is a project that explores mixed-reality expression in autonomous systems, supporting human-AI interaction in open-ended creative contexts. Despite continuous advances in the technical complexity and capabilities of intelligent creative systems, research into collaborating with such systems in open-ended environments has only recently begun to gain attention.

Our work adopts an “off-the-shelf” methodology, leveraging previously developed, open-source components to propose an augmented reality human-AI performance system while reflecting on practice-based insights.

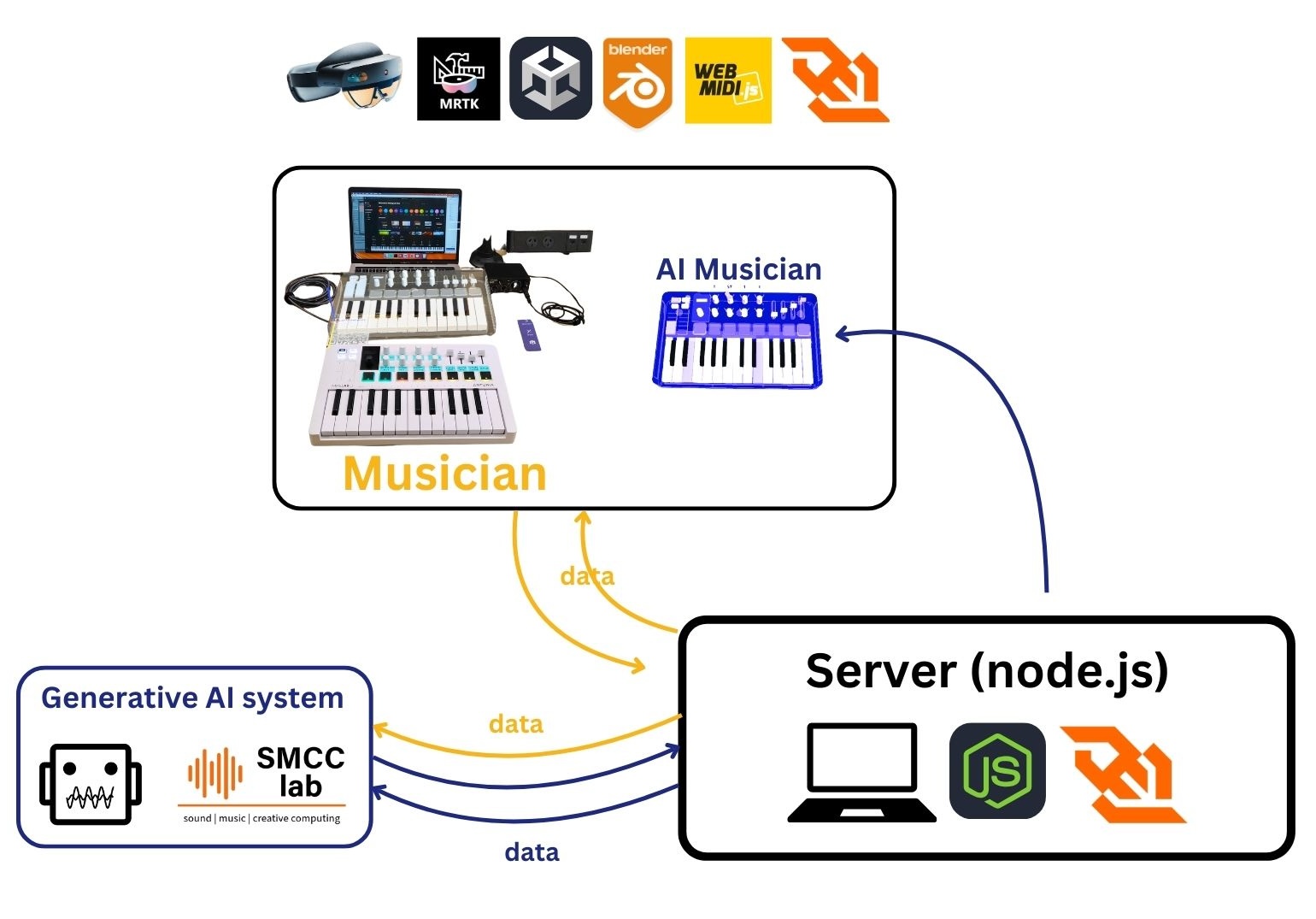

The performance system comprises two research-focused components: our minimal intelligent music controller, the GenAI MIDI Plug (impsy), and our mixed-reality musical interface, arMIDI. These components communicate over a network to create a dynamic, interactive performance environment.
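The description above does not say which protocol links the controller and the interface. To make the data flow concrete, here is a minimal sketch that *assumes* the controller forwards each predicted note to the AR interface as a raw three-byte MIDI 1.0 note-on message inside a UDP datagram. The function names (`encode_note_on`, `send_prediction`, `receive_event`, `loopback_demo`) are hypothetical and are not taken from impsy or arMIDI; a real deployment might use OSC or another transport instead.

```python
import socket

NOTE_ON = 0x90  # MIDI 1.0 status nibble for a note-on message


def encode_note_on(channel: int, note: int, velocity: int) -> bytes:
    """Pack a MIDI 1.0 note-on message into three raw bytes."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([NOTE_ON | channel, note, velocity])


def decode_note_on(data: bytes) -> tuple[int, int, int]:
    """Unpack a three-byte note-on message into (channel, note, velocity)."""
    if len(data) != 3 or (data[0] & 0xF0) != NOTE_ON:
        raise ValueError("not a note-on message")
    return data[0] & 0x0F, data[1], data[2]


def send_prediction(sock: socket.socket, address, channel: int, note: int, velocity: int) -> None:
    # Hypothetical controller side: forward one predicted note to the AR interface.
    sock.sendto(encode_note_on(channel, note, velocity), address)


def receive_event(sock: socket.socket) -> tuple[int, int, int]:
    # Hypothetical AR-interface side: read one datagram and decode it.
    data, _ = sock.recvfrom(16)
    return decode_note_on(data)


def loopback_demo() -> tuple[int, int, int]:
    # Both endpoints run on the loopback interface for this demonstration.
    interface_sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    controller_sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        interface_sock.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        interface_sock.settimeout(1.0)
        send_prediction(controller_sock, interface_sock.getsockname(), 0, 60, 100)
        return receive_event(interface_sock)
    finally:
        controller_sock.close()
        interface_sock.close()
```

Calling `loopback_demo()` sends middle C (note 60) at velocity 100 on channel 0 and should return `(0, 60, 100)`. UDP is chosen here only because it is connectionless and low-latency, which suits live performance; it offers no delivery guarantee, so a dropped datagram means a dropped note.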