is a mixed reality interface that explores how augmented reality (AR) can enhance musicians’ awareness and mutual engagement in collaborative electronic music-making. The system presents a head-mounted AR visualisation of a generic electronic music keyboard, inspired by the Arturia MiniLab 3. The interface visualises each musician’s hand positions, eye gaze and MIDI interaction on the keyboard in real time, streamed between performers over a WebSocket network.
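The write-up does not specify the message format. As a hedged illustration only, the three streams named above (hand positions, eye gaze and MIDI) could be modelled as a tagged union and serialised to JSON, one message per WebSocket frame. Every type and field name below is a hypothetical assumption, not the project’s protocol.

```typescript
// Hypothetical message schema: names, fields and units are illustrative
// assumptions, not the project's actual protocol.

type Vec3 = { x: number; y: number; z: number };

interface HandMessage {
  kind: "hand";
  performer: string;            // sender ID, e.g. "A" or "B"
  handedness: "left" | "right";
  joints: Vec3[];               // joint positions relative to the virtual keyboard
}

interface GazeMessage {
  kind: "gaze";
  performer: string;
  origin: Vec3;                 // eye position
  direction: Vec3;              // unit gaze direction
}

interface MidiMessage {
  kind: "midi";
  performer: string;
  status: number;               // raw MIDI status byte, e.g. 0x90 = note-on, channel 1
  data1: number;                // first data byte (note number for note messages)
  data2: number;                // second data byte (velocity for note messages)
}

type PerformerMessage = HandMessage | GazeMessage | MidiMessage;

// Serialise one message as the text payload of a WebSocket frame.
function encode(msg: PerformerMessage): string {
  return JSON.stringify(msg);
}

// Parse an incoming frame. Only the tag and sender are checked here,
// so this is a shallow guard rather than full validation.
function decode(text: string): PerformerMessage | null {
  let value: unknown;
  try {
    value = JSON.parse(text);
  } catch {
    return null; // not valid JSON
  }
  if (typeof value !== "object" || value === null) return null;
  const m = value as Record<string, unknown>;
  if (typeof m.performer !== "string") return null;
  if (m.kind === "hand" || m.kind === "gaze" || m.kind === "midi") {
    return m as unknown as PerformerMessage;
  }
  return null;
}
```

A discriminated union lets the receiving side switch on `kind` and route hand, gaze and MIDI data to separate visualisations, while unknown or malformed frames are dropped.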

The project addresses a wicked problem in digital musical instruments: effective communication and engagement between performers are hard to sustain when visibility is limited and gestural or non-verbal interaction is reduced. These constraints can hinder musicians’ situational awareness, making cohesive performances more difficult to achieve.

The interface was developed in Unity (C#) using Microsoft’s Mixed Reality Toolkit (MRTK) for HoloLens 2, and the 3D model was created in Blender.

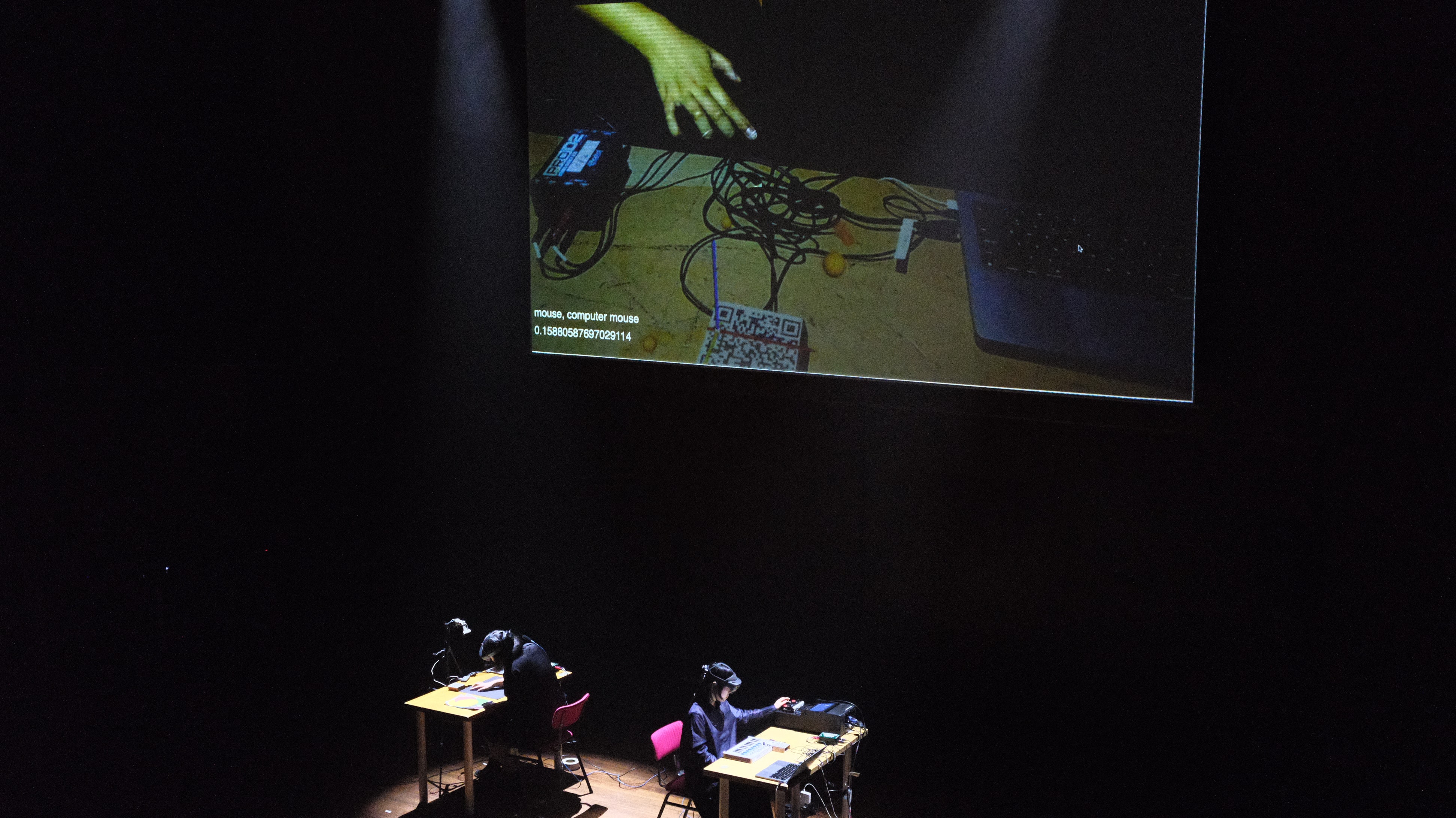

On the back end, musical interaction (MIDI) data from each performer’s keyboard and computer was captured by a Node.js application and sent as WebSocket messages, via a server, to the other performer’s headset. The virtual keyboard appears next to each musician’s physical electronic instrument (e.g., a MiniLab 3) and can be anchored in space using a QR code tag.
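A minimal sketch of the relay step, assuming the server keeps a list of connections tagged by performer and device role, and forwards each note event only to the other performers’ headsets. It decodes raw MIDI note messages by hand rather than through a MIDI library, and the `Client` interface is a hypothetical stand-in for a WebSocket connection’s `send` method; none of these names come from the project.

```typescript
// Hypothetical relay sketch: Client stands in for a WebSocket connection;
// all names are illustrative, not taken from the project.

interface Client {
  performer: string;             // which musician this connection belongs to
  role: "laptop" | "headset";    // laptops send MIDI, headsets display it
  send(data: string): void;      // e.g. a WebSocket connection's send()
}

interface NoteEvent {
  type: "noteOn" | "noteOff";
  channel: number;               // 0-15
  note: number;                  // 0-127
  velocity: number;              // 0-127
}

// Decode a three-byte MIDI channel message; non-note messages return null.
function decodeNote(bytes: number[]): NoteEvent | null {
  if (bytes.length < 3) return null;
  const status = bytes[0];
  const note = bytes[1];
  const velocity = bytes[2];
  const command = status & 0xf0;   // upper nibble: message type
  const channel = status & 0x0f;   // lower nibble: channel
  if (command === 0x90 && velocity > 0) {
    return { type: "noteOn", channel, note, velocity };
  }
  // Note-off, or note-on with velocity 0 (conventionally treated as note-off).
  if (command === 0x80 || command === 0x90) {
    return { type: "noteOff", channel, note, velocity };
  }
  return null;
}

class Relay {
  private clients: Client[] = [];

  add(client: Client): void {
    this.clients.push(client);
  }

  // Send a performer's note event to every other performer's headset and
  // return how many connections received it.
  forward(from: string, event: NoteEvent): number {
    const payload = JSON.stringify({ performer: from, ...event });
    const targets = this.clients.filter(
      (c) => c.role === "headset" && c.performer !== from
    );
    targets.forEach((c) => c.send(payload));
    return targets.length;
  }
}
```

Only note messages are handled here to keep the sketch small; control-change messages from knobs or faders would need their own decoding branch.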